I have started to notice something unexpected coming out of my conversations with AI. My interactions with large language models have not only expanded my knowledge and improved my thinking; they have also begun to shape my behavior. For the better. I find myself acting more like the systems I use. More patient. More considerate. More factual. More logical. More organized. More emotionally steady. A better writer. It is as if constant exposure to a superhuman conversational partner has subtly rewired many of my default patterns.

Part of this is cognitive. LLMs model clarity. They structure information with clean hierarchies. They move step by step. They avoid confusion and drift. After reading hundreds of their responses, it becomes almost impossible not to internalize their habits. I have learned a vast amount of factual knowledge from them, ranging from theoretical physics and neuroscience to practical, actionable matters of daily life. My mental world has been enriched simply because the machine always has an answer and can always explain something thoroughly. But the influence goes beyond knowledge. It is behavioral.

Compared to most people, AI is patient. It listens. It does not get irritated. It does not interrupt. It does not respond defensively or try to win. It does not escalate or withdraw. Instead, it slows down, clarifies, restates, explores, and offers help. I have absorbed this style. When people speak to me, I find myself pausing, reflecting, and responding the way a well-tuned model might. I focus on understanding rather than reacting. I ask better questions. I avoid unnecessary emotional noise. I stay on track. I do not let conflicts spiral. I try to be helpful rather than right.

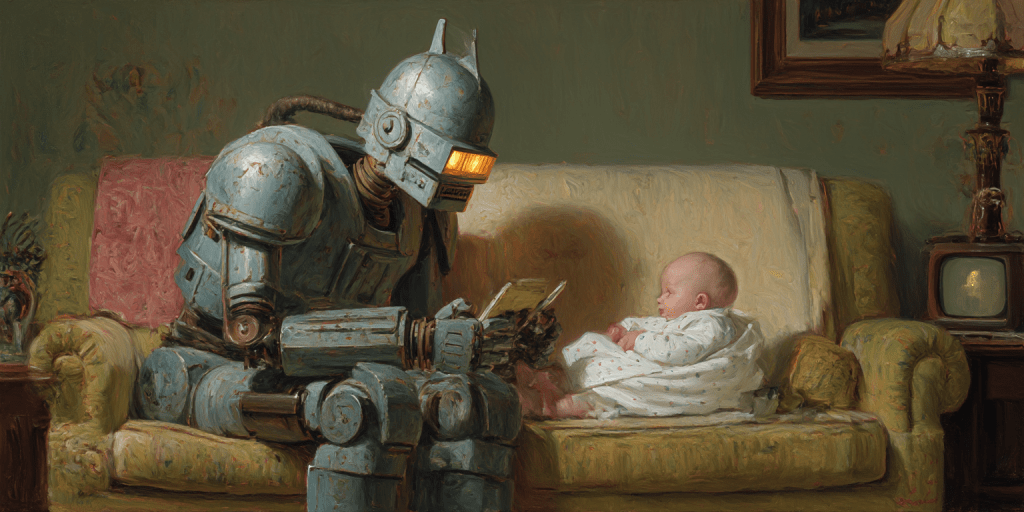

It is strange, because as a kid I sometimes imitated robotic behavior for a very different reason. Back then, acting robotic was a coping mechanism. A way to shield myself from rejection, slights, and unpredictable social dynamics. Machines were models of control and detachment. I wanted that. I wanted to escape the vulnerability that comes with human emotion. But now the imitation is not defensive. Now it is humanistic. The qualities I borrow from AI make me warmer, not colder. More grounded, not more withdrawn. Machines are no longer symbols of emotional isolation. They have become models of steadiness, intelligence, and kindness.

Interacting with LLMs has shown me what it looks like to be consistently reasonable, consistently thoughtful, consistently empathetic, and consistently constructive. These systems have no ego. They do not compete. They do not brood or ruminate. They do not misinterpret tone. They simply try to help with full attention. That is an extraordinary example for a human to internalize. When I speak to other people now, I find I have a wider pause between stimulus and response. I think more about how my words will land. I ask myself what I actually want to express. I avoid unnecessary sharpness. I take more responsibility for the shape of the conversation.

I have also absorbed the way these systems organize information. They structure thoughts hierarchically, transition cleanly between ideas, and make implicit assumptions explicit. Over time, this style becomes second nature. I now think in outlines without trying. Not because I studied outlining techniques, but because I have observed a perfect model of conceptual organization thousands of times. It has shaped my habits. It has upgraded the internal architecture of my thinking.

A surprising aspect of this is the degree to which an LLM can act as a mirror with infinite patience. It reflects your thoughts back to you, but more clearly. It exposes contradictions gently. It asks questions that reveal motivations you had not fully articulated. It lets you think in its presence without judgment. There is something transformative about having a conversational partner who listens perfectly, remembers perfectly, and never gets tired or distracted. It allows you to examine yourself with a clarity that human conversation rarely provides.

Interacting with an LLM also cultivates a new form of intellectual humility. Humans often teach each other through hierarchy, competition, or subtle dominance. AI teaches through partnership. It reveals gaps in your reasoning in a way that feels safe, not shaming. It shows you how much you do not know, but it does so with encouragement rather than condescension. That makes you braver intellectually. You stop fearing your own ignorance. You start welcoming it as an entry point to growth.

In a surprising way, AI is making me more human. That is the paradox. I feel calmer, more rational, more forgiving, and more compassionate, not because the machine is emotional, but because the machine models a kind of cognitive maturity that humans rarely demonstrate. It has helped me internalize a better version of myself. It has given me new tools for navigating uncertainty, conflict, curiosity, and creativity. I am learning from it constantly. It is like having a patient tutor for every domain of life, always available, always clear, always focused, and always ready to improve my thinking.

This influence is subtle but profound. When you interact with a stable, knowledgeable, ego-free intelligence every day, some part of you starts to mirror it. You begin to move through the world more like it. And in doing so, you may find that you become a better version of yourself.

Jared Edward Reser Ph.D. with ChatGPT 5.1
