Abstract

Qualia are often treated as a static property attached to an instantaneous neural or computational state: the redness of red, the painfulness of pain. Here I argue that this framing misidentifies the explanatory target. Drawing on the Iterative Updating model of working memory, I propose that a substantial portion of what we call qualia, especially the felt “presence” of experience, is a temporal-architectural artifact: it arises from the way cognitive contents are carried forward, modified, and monitored across successive processing cycles. The core mechanism is partial overlap between consecutive working states, producing continuity without requiring a continuous substrate. I then add a second ingredient, transition awareness: the system’s current working state contains usable information about its own recent updating trajectory, allowing it to regulate, correct, and stabilize ongoing thought. On this view, consciousness is not merely iterative updating, but iterative updating that is tracked by the system as it unfolds. Finally, I treat self-consciousness as a special case of this same machinery, in which a subset of variables is stabilized across updates as enduring invariants, anchoring ownership and agency within the stream. This framework reframes the hard problem by shifting attention from timeless “qualitative atoms” to temporally extended relations among states, and it yields empirical predictions: qualia-related reports should covary with measurable parameters such as overlap integrity, update cadence, monitoring depth, and invariant stability, providing a path toward operationalizing aspects of subjective experience in both neuroscience and machine architectures.

Section 1. The problem as usually framed, and why it stalls

Philosophical discussion of qualia tends to begin with an intuition that feels both obvious and irreducible: there is something it is like to see red, to feel pain, to hear a melody, and no purely third-person description seems to capture that first-person fact. From this starting point, a familiar structure appears. On one side are approaches that treat qualia as fundamental, perhaps even as a primitive feature of the universe. On the other side are approaches that treat qualia as a kind of cognitive illusion, a user-interface story the brain tells itself. In between sit families of functionalist and representational views that try to keep experience real while insisting it is fully grounded in what a system does.

The debate stalls, in my view, because the term qualia functions like a suitcase word. It does not refer to one problem. It packages several. The vivid sensory character of experience is one part. The unity of experience, the fact that the world appears as a coherent scene rather than a shuffled deck of fragments, is another. The continuity of experience, the fact that there is a temporally thick “now” rather than a sequence of disconnected instants, is another. And then there is ownership, the sense that experience is present to someone, and present as mine. When we ask “why is there something it is like,” we are often asking about all of these at once, and then treating the bundle as if it were a single indivisible mystery.

There is a second, quieter reason the debate stalls. Much of the philosophical literature implicitly treats experience as if it were a snapshot. Even when philosophers acknowledge the specious present, the mechanisms under discussion are typically framed as properties of a state at a time. But lived experience is not most naturally described as a mathematical instant. The present we actually inhabit has duration. It has inertia. It has carryover. It has direction. A theory that tries to explain experience while ignoring the temporal structure that makes the present feel like a present is likely to be forced into metaphysical inflation, because it is leaving explanatory work on the table.

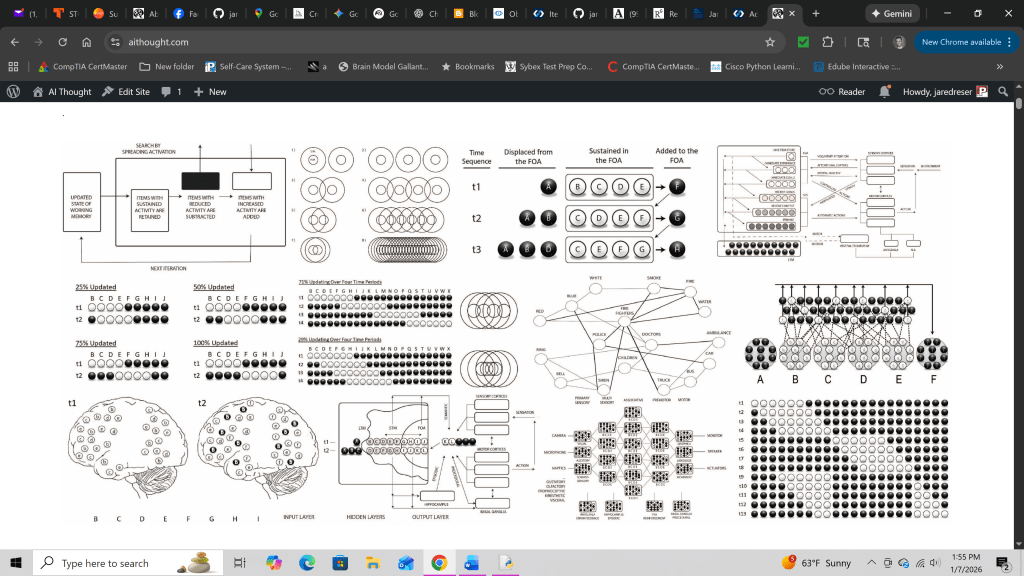

My goal here is not to solve the entire problem of qualia in one stroke. It is to propose a narrower and more tractable strategy. Instead of beginning with the most ineffable aspect of qualia, I begin with the temporal architecture that makes experience continuous and present at all. I have laid out this architecture in my model of consciousness at:

aithought.com

I then argue that what we call consciousness, in the sense of presence, may involve a system that not only updates its working contents over time but also tracks that updating as it occurs. This turns part of the qualia discussion into an architectural question. Under what temporal and computational conditions does a stream of updating become a stream that is lived?

Section 2. Iterative updating as the continuity substrate

The core architectural idea is simple. Working memory is not replaced wholesale from moment to moment. It is updated. In each cycle, some portion of the currently active content remains, some portion drops out, and some new content is added. This is not merely a convenience. It is a structural constraint with phenomenological consequences. If a system’s present state contains a nontrivial fraction of the immediately prior state, then the system carries its own past forward as a constituent of its current processing. Continuity is built into the physics of the computation.
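The update cycle described above can be sketched in a few lines. This is a toy illustration under assumptions introduced here, not stated in the model itself: the working state is a fixed-capacity list of discrete items, and the oldest items are displaced first. The function names `update` and `overlap` are hypothetical.

```python
def update(state: list[str], new_items: list[str], capacity: int) -> list[str]:
    """One iterative-updating cycle (illustrative sketch).

    Items in `new_items` are added at the most recent end; an item that is
    already active is refreshed (moved to the recent end) rather than
    duplicated. If the working set then exceeds `capacity`, the oldest
    items drop out. Everything not displaced carries forward.
    """
    if capacity < 1:
        raise ValueError("capacity must be at least 1")
    fresh = list(dict.fromkeys(new_items))  # dedupe, keep arrival order
    carried = [item for item in state if item not in fresh]
    return (carried + fresh)[-capacity:]


def overlap(prev: list[str], curr: list[str]) -> float:
    """Fraction of the previous state that is still active in the current one."""
    if not prev:
        return 0.0
    return len(set(prev) & set(curr)) / len(set(prev))


state = ["A", "B", "C", "D"]
next_state = update(state, ["E"], capacity=4)
print(next_state)                  # ['B', 'C', 'D', 'E']
print(overlap(state, next_state))  # 0.75
```

With a capacity of four and one genuinely new item per cycle, three quarters of each state survives into the next; introducing more new items per cycle lowers that fraction.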

Once this is stated plainly, the specious present looks less like a philosophical puzzle and more like an expected property of overlapping updates. The “now” is not a point. It is the short interval over which remnants of the previous state and elements of the emerging state coexist and interact. Subjectively, this coexistence can feel like temporal thickness. Mechanistically, it is the overlap region in which decay and refresh are simultaneously present. If the overlap were zero, cognition would be frame-by-frame. If the overlap were near total, cognition would become sticky, dominated by inertia rather than responsiveness. In between is a regime that supports smooth transitions: enough persistence to bind time together, enough turnover to incorporate new information and move.
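The overlap regimes above can be stated compactly. Treating each working state as a finite set W_t of active elements (a simplification adopted here for illustration, not a claim about neural coding), the fraction of one state that survives into the next is:

```latex
\omega_t = \frac{\lvert W_t \cap W_{t+1} \rvert}{\lvert W_t \rvert}, \qquad 0 \le \omega_t \le 1
```

In this notation, ω_t = 0 is the frame-by-frame case, ω_t close to 1 is the sticky, inertia-dominated case, and the smooth-transition regime lies in between. The notation fixes no particular boundary values for that middle regime.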

This kind of overlap also offers a natural basis for the unity of experience. When multiple representational elements remain coactive across successive updates, they can constrain one another over time. The system does not simply display a series of unrelated contents. It maintains a structured constellation long enough for relationships among its elements to be tested, revised, and stabilized. In that sense, iterative updating is not only a memory mechanism. It is an information-processing mechanism. It is a way of letting a set of simultaneously held items do work together, and of letting that work continue across short spans of time instead of resetting at every step.

Importantly, none of this yet requires a claim about metaphysical ingredients. It is a claim about architecture. It says that if you want a temporally continuous mind, you should look for a temporal continuity constraint in the underlying processing. The overlap itself is not a complete theory of consciousness, but it may be a necessary substrate for the specific features of consciousness that are most obviously temporal: the feeling of flow, the felt presence of an extended now, and the stability that allows a moment to be experienced as part of an ongoing scene.

Section 3. Transition awareness: when continuity becomes presence

Up to this point, the story is about a substrate: overlapping updates create temporal continuity. But continuity is not identical to presence. A system can exhibit overlap in its internal dynamics and still fail to have anything like subjective availability, in the ordinary sense in which a mental episode is present to the organism. This is where many theories quietly smuggle in an observer, a global workspace “reader,” or a higher-order monitor that watches the stream from outside. My preference is to avoid that move. If a monitoring function is required, it should be implemented as part of the same iterative machinery, not as an additional homunculus.

The key proposal is that consciousness, in the sense of presence, arises when the iterative updating process is not merely occurring but is being tracked by the system as it occurs. In other words, the system’s current working state includes not just representational content, but a usable representation of change. It contains information that a transition is underway, what has been retained, what has been lost, and what is being incorporated. This is not mystical. It is a familiar engineering pattern: a process that exposes its own internal state to itself can do more robust control, error correction, and planning than a process that only emits outputs.
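To make “a usable representation of change” concrete, here is a minimal sketch assuming working states are sets of discrete items. The `Transition` record and its three fields simply mirror the three quantities named above (retained, lost, incorporated); they are a hypothetical illustration, not an established data structure.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Transition:
    """Hypothetical record of one update: what stayed, what left, what arrived."""
    retained: frozenset[str]
    lost: frozenset[str]
    incorporated: frozenset[str]


def describe_transition(prev: set[str], curr: set[str]) -> Transition:
    """Express the change between two working states as set differences."""
    return Transition(
        retained=frozenset(prev & curr),
        lost=frozenset(prev - curr),
        incorporated=frozenset(curr - prev),
    )


t = describe_transition({"red", "apple", "table"}, {"red", "apple", "hand"})
print(sorted(t.retained))      # ['apple', 'red']
print(sorted(t.lost))          # ['table']
print(sorted(t.incorporated))  # ['hand']
```

A system that merely updates would compute the new state and discard this record; on the proposal above, a transition-aware system keeps something like it available to its next update.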

If this is correct, then qualia-like presence is less like a static glow attached to a percept and more like an active relation between successive states. The system does not merely have a red representation. It has a red representation whose arrival, persistence, and integration are being handled in a structured way by the very process that is updating the workspace. The experience is not only the content but the content-as-it-is-being-carried-forward.

There is a useful way to say this without overreaching. Consciousness is not the iterative updating itself, because many biological and artificial processes update iteratively without anything we would call experience. Rather, consciousness is iterative updating plus transition awareness: the system maintains an accessible, functionally relevant trace of its own recent updating trajectory. The trace can be minimal. It does not have to be a narrative. It can be a structured sensitivity to what has changed. But it is crucial that the system can use this information to guide the next update. When the system is sensitive to its own transitions, it is not merely moved along by dynamics. It is, in a sense, present to those dynamics.
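The requirement that the trace guide the next update can be illustrated with a toy controller. This is a sketch under stated assumptions: states are sets of items, and the hypothetical rule in `transition_aware_update` caps how much of the previous state a single update may discard. The rule, its name, and the default `max_loss` value are illustrative choices, not part of the model. A system without transition awareness would simply accept `proposed` as-is.

```python
def transition_aware_update(
    prev: set[str],
    proposed: set[str],
    max_loss: float = 0.5,
) -> set[str]:
    """Accept a proposed next state, but consult the transition first.

    Hypothetical control rule: if the proposed state would drop more than
    `max_loss` of the previous state, reinstate just enough lost items to
    bring the loss back to that limit. Capacity limits are ignored here
    for brevity.
    """
    lost = sorted(prev - proposed)  # sorted so the reinstated items are deterministic
    allowed_loss = int(max_loss * len(prev))
    excess = len(lost) - allowed_loss
    if excess <= 0:
        return set(proposed)
    return set(proposed) | set(lost[:excess])


# A moderate change passes through untouched.
print(sorted(transition_aware_update({"A", "B", "C", "D"}, {"A", "B", "C", "E"})))
# ['A', 'B', 'C', 'E']

# An abrupt wholesale replacement is damped by reinstating part of the past.
print(sorted(transition_aware_update({"A", "B", "C", "D"}, {"E", "F"})))
# ['A', 'B', 'E', 'F']
```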

This framing has two advantages. First, it offers a plausible reason why experience feels temporally thick. The “now” is not just overlap. It is overlap that is being actively negotiated. Second, it links phenomenology to control. Presence becomes the experiential face of a self-updating controller that must remain online to keep the stream coherent. A purely feedforward system can produce outputs, but it cannot be present to the way it is transforming itself in time. A transition-aware system can.

One implication is that consciousness should scale with the degree to which transition information is accessible and used. A system may carry forward overlap but fail to track it. In that case, it may behave in ways that look coherent while lacking a robust sense of presence. Conversely, a system may track transitions deeply, and in doing so gain a richer sense of being in the middle of an unfolding process. This gives us a principled way to talk about gradations without asserting that everything is either a zombie or a full subject.

Section 4. Self as a stabilized set of invariants within the stream

If presence is the system tracking the updating, self-consciousness is the system tracking itself within what is being updated. The self is not a separate object added to experience. It is a set of stable variables, constraints, and reference points that remain active, or remain easily reinstated, across successive updates and thereby anchor the stream. In lived life, these invariants include body-relevant signals, enduring goals, social commitments, autobiographical expectations, and the persistent sense that this stream belongs to one agent.

This can be stated in an architectural way. The iterative updating process continuously selects which elements will remain in the workspace and which will be replaced. If certain elements are repeatedly retained or rapidly reintroduced, they become quasi-permanent constraints. They function like a coordinate system. They define what counts as relevant, what counts as threatening, what counts as mine. Over time, these constraints produce a stable center of gravity. The “self” is the name we give to that center of gravity as it is maintained across the stream.
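One simple way to operationalize “repeatedly retained or rapidly reintroduced” is to count how often each element appears across a window of recent working states. The sketch below assumes states are logged as sets of discrete labels; the function name `find_invariants`, the example labels, and the 0.8 threshold are hypothetical.

```python
from collections import Counter


def find_invariants(history: list[set[str]], threshold: float = 0.8) -> set[str]:
    """Return items present in at least `threshold` of the recorded states.

    Items that are repeatedly retained, or reinstated soon after dropping
    out, clear the threshold; transient items do not.
    """
    if not history:
        return set()
    counts = Counter(item for state in history for item in state)
    return {item for item, n in counts.items() if n / len(history) >= threshold}


history = [
    {"body", "goal", "cup"},
    {"body", "goal", "door"},
    {"body", "music"},           # "goal" briefly drops out...
    {"body", "goal", "window"},  # ...and is rapidly reinstated
    {"body", "goal", "cup"},
]
print(sorted(find_invariants(history)))  # ['body', 'goal']
```

Counting presence rather than unbroken runs is a deliberate choice here: it lets an element that is briefly displaced but quickly reintroduced still count as part of the stable center of gravity.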

In this view, self-reference is not primarily conceptual. It is operational. When the system models the likely consequences of an action, it must do so relative to its own body, its own goals, and its own expected future states. That requires keeping certain self-related parameters online across updates. When those parameters are stable, the stream feels owned. When they are unstable, the stream can remain continuous but lose its familiar sense of ownership. This is one reason depersonalization and derealization are so philosophically important. They suggest that continuity of experience and ownership of experience can come apart, at least partially, which is exactly what an architectural decomposition would predict.

This also suggests that the self is graded and modular. Not every self-variable has to be online at every moment. The body schema may be present while autobiographical narrative is not. Goals may be vivid while social identity fades. In everyday life, we slide around in this space. In stress, fatigue, anesthesia, meditation, or certain clinical states, the distribution shifts. A theory that equates self-consciousness with a single module will struggle to accommodate these shifts. A theory that treats the self as a set of invariants stabilized across iterative updating can accommodate them naturally.

Finally, this offers a clean way to relate self-consciousness to transition awareness. If presence is tracking the updating, self-consciousness is tracking the updating while treating some of the tracked variables as self-defining. The system is not only aware that the stream is unfolding. It is aware that it is the locus of unfolding, because certain constraints persist and are tagged, implicitly or explicitly, as belonging to the same continuing agent. The self, on this account, is the temporal binding of agency-related constraints.

Section 5. From mystical properties to architectural variables

The preceding claims can be summarized as a layered proposal. Iterative updating provides a continuity substrate by partially overlapping successive working states. Transition awareness provides presence by making the updating trajectory accessible to the system’s own control process. Self-consciousness provides ownership by stabilizing a subset of variables as enduring invariants within that trajectory. This is not meant as a rhetorical flourish. It is meant as a shift in the type of explanation. If we can specify these layers, then at least some components of qualia become architectural artifacts rather than metaphysical primitives.

The practical value of this shift is that it encourages us to define variables. Even if we cannot yet measure them perfectly, we can describe what would count as evidence for them. The first variable is overlap integrity: how much of state A remains functionally active in state B, and for how long. The second is update cadence: the typical rate at which new elements are introduced and old elements are removed, and how that rate changes under different conditions. The third is monitoring depth: the degree to which the system’s current state contains usable information about its own recent transition history, not merely about the external world. The fourth is invariant stability: how reliably certain self-relevant constraints are maintained or reinstated across time.
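Three of these variables can be given toy definitions over a logged sequence of working states. The sketch assumes states are recorded as sets of discrete items and that the self-relevant items are designated in advance; all function names and definitions are hypothetical. Monitoring depth is left out because it concerns what a state contains about its own history, which a bare membership log does not capture.

```python
from statistics import mean


def overlap_integrity(history: list[set[str]]) -> float:
    """Mean fraction of each state that is still present in the next state.

    Requires at least two states, at least one of them non-empty.
    """
    return mean(len(a & b) / len(a) for a, b in zip(history, history[1:]) if a)


def update_cadence(history: list[set[str]]) -> float:
    """Mean number of newly introduced items per update cycle."""
    return mean(len(b - a) for a, b in zip(history, history[1:]))


def invariant_stability(history: list[set[str]], self_items: set[str]) -> float:
    """Mean fraction of the designated self-relevant items present per state."""
    return mean(len(state & self_items) / len(self_items) for state in history)


history = [
    {"body", "goal", "A", "B"},
    {"body", "goal", "B", "C"},
    {"body", "C", "D", "E"},
]
print(overlap_integrity(history))                                # 0.625
print(update_cadence(history))                                   # 1.5
print(round(invariant_stability(history, {"body", "goal"}), 3))  # 0.833
```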

These variables motivate what I have called an informational viscosity view. In a low-viscosity system, states are discrete and do not significantly bleed into one another. In a high-viscosity system, states persist and constrain the next state strongly. Conscious experience, on this view, is most likely in a middle regime: enough viscosity to produce a temporally thick present and stable ownership, but not so much that the system becomes stuck. The qualitative feel of experience may then be partly determined by where the system sits in that regime and how effectively it monitors its own transitions.
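Informational viscosity is described qualitatively here. One simple stand-in, chosen for illustration and not claimed to be the author's formalism, is exponential smoothing of a scalar state, where a single parameter v controls how strongly the previous state constrains the next one. The sketch measures how many cycles the state needs to catch up with an abrupt change in input; the function names and the tolerance are hypothetical.

```python
def smoothed_trajectory(inputs: list[float], viscosity: float) -> list[float]:
    """Toy update x_next = v * x + (1 - v) * input.

    `viscosity` (v) must lie in [0, 1): 0 means each state is just the
    current input (frame-by-frame); values near 1 mean the prior state
    dominates and new input is absorbed slowly.
    """
    if not 0.0 <= viscosity < 1.0:
        raise ValueError("viscosity must be in [0, 1)")
    x = inputs[0]
    trajectory = [x]
    for u in inputs[1:]:
        x = viscosity * x + (1 - viscosity) * u
        trajectory.append(x)
    return trajectory


def steps_to_settle(trajectory: list[float], target: float, tol: float = 0.1) -> int:
    """Index of the first state within `tol` of `target`, or -1 if never."""
    for i, x in enumerate(trajectory):
        if abs(x - target) <= tol:
            return i
    return -1


# Input switches abruptly from 0 to 1 after the first step.
signal = [0.0] + [1.0] * 30
for v in (0.0, 0.5, 0.9):
    print(v, steps_to_settle(smoothed_trajectory(signal, v), target=1.0))
# 0.0 1
# 0.5 4
# 0.9 22
```

Low viscosity responds immediately but carries nothing forward; high viscosity carries a great deal forward but lags. The middle regime described above would correspond to intermediate values of v, though this scalar toy says nothing about where that regime lies for any real system.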

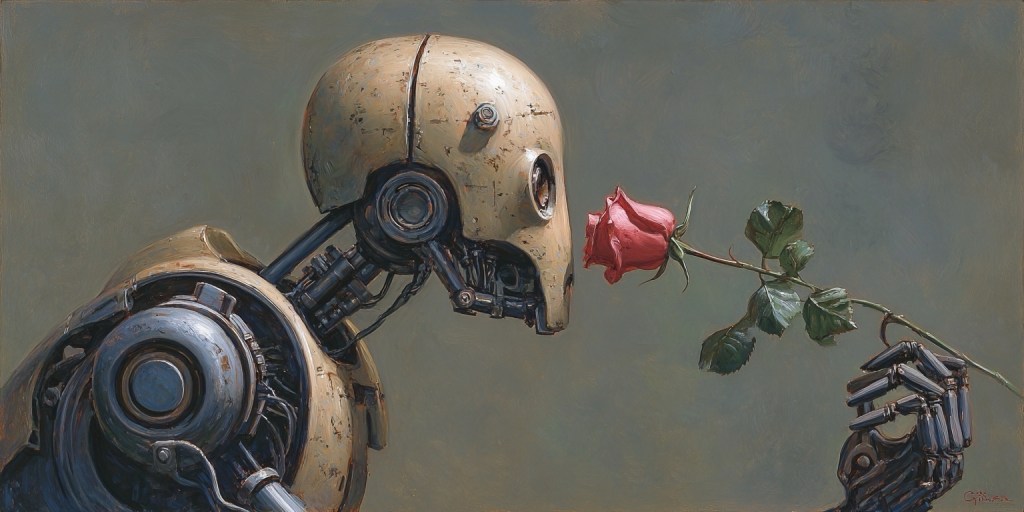

This way of speaking also offers a cautious bridge to questions about machine qualia. I do not claim that any particular artificial system is conscious or that continuity metrics alone settle the issue. But the framework suggests a more precise research question than “can silicon feel.” It suggests that a system’s “qualia potential,” whatever one thinks of that phrase, would be expected to increase as it exhibits robust state overlap, transition awareness, and stable self-invariants. This turns a metaphysical standoff into an engineering hypothesis: if we build systems with these temporal properties and they begin to show markers of unified, self-stabilized processing, we will at least have moved the debate into a domain where evidence can accumulate.

A final point is worth stating plainly. Nothing here fully explains why red has the character it has. The sensory-specific character of experience remains difficult. What this framework tries to do is clarify which parts of the qualia problem are plausibly addressed by temporal architecture. Continuity, presence, and ownership are not minor features. They are central to what people mean when they speak about the “feel” of being conscious. If we can explain those in a principled way, we have reduced the explanatory gap, even if we have not fully closed it.

Section 6. Empirical and clinical predictions

A theory gains credibility when it predicts dissociations, not just correlations. The layered structure proposed here implies that continuity, presence, and selfhood can vary somewhat independently. This matters because it yields specific predictions across stress, anesthesia, cognitive load, and altered states.

First, continuity should covary with overlap integrity. Conditions that reduce persistence or disrupt partial carryover should increase reports of fragmentation, temporal disorientation, and context-loss errors. Conditions that increase persistence excessively should produce perseveration, intrusive carryover, and a sense of cognitive stickiness. Importantly, these changes need not map neatly onto performance. A person may perform adequately while experiencing reduced temporal thickness, especially if compensatory routines are available.

Second, presence should depend on transition awareness, not merely on content. If monitoring depth is reduced, one would expect a decrease in metacognitive clarity and a thinning of “for-me-ness” even when perception and behavior remain relatively intact. This suggests that certain anesthetic or dissociative states might preserve processing of stimuli while degrading the feeling of being present to one’s own processing. Conversely, tasks that force a person to track internal changes, rather than merely detect external targets, should amplify felt presence if transition awareness is a real contributor.

Third, selfhood should track invariant stability. Depersonalization and derealization should correlate with disruptions in the maintenance of self-relevant constraints across updates, even when the perceptual scene remains coherent. The model predicts that when self-invariants become unstable, the stream can remain continuous while feeling unowned, distant, or unreal. This is a philosophically valuable dissociation because it suggests that ownership is not identical to continuity.

Fourth, stress should compress the system toward reactive updating. Under stress, people often report being less able to “hold the whole situation in mind,” more prone to snap judgments, and more vulnerable to context mixing. In this framework, stress reduces both overlap integrity and transition monitoring by pushing the system toward faster, less controlled updating and by destabilizing the maintenance of invariants. This yields a concrete prediction: stress should selectively degrade tasks that require maintaining a stable constellation over multiple steps, especially when self-relevant variables must be integrated with external cues.

Fifth, working-memory load should reduce the perceived richness of experience via compression. As the system approaches capacity, it should rely more heavily on coarse summaries and categorical representations. Subjectively, this may feel like a narrowing of experience, not necessarily because sensory input is absent, but because the system cannot sustain enough structured overlap to preserve nuance. This prediction aligns with ordinary introspection: when overloaded, we remain awake, but the present feels thin and schematic.
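The compression idea can be illustrated with a toy routine that, when a working set exceeds its capacity, replaces the largest group of same-category items with a single category label. The greedy largest-group-first rule, the `category_of` mapping, and the example labels are assumptions for illustration only; the text does not specify how summaries are formed.

```python
from collections import Counter


def compress(items: list[str], capacity: int, category_of: dict[str, str]) -> list[str]:
    """Fit items into `capacity` slots by swapping detail for category labels.

    Greedy, hypothetical rule: while over capacity, replace the largest
    group of same-category items with its category label, placed where the
    first member was. Stops early if no group of two or more remains.
    """
    state = list(dict.fromkeys(items))  # dedupe, keep order
    while len(state) > capacity:
        counts = Counter(category_of[i] for i in state if i in category_of)
        if not counts or counts.most_common(1)[0][1] < 2:
            break  # nothing left to summarize usefully
        category = counts.most_common(1)[0][0]
        members = [i for i in state if category_of.get(i) == category]
        position = state.index(members[0])
        state = [i for i in state if i not in members]
        state.insert(position, category)
    return state


items = ["crimson", "scarlet", "maroon", "oak", "pine", "car"]
category_of = {"crimson": "red", "scarlet": "red", "maroon": "red",
               "oak": "tree", "pine": "tree"}
print(compress(items, 4, category_of))  # ['red', 'oak', 'pine', 'car']
print(compress(items, 3, category_of))  # ['red', 'tree', 'car']
```

The output stays “about” the same scene, but the shades of red and the kinds of tree are no longer individually held, which is the kind of thinning the prediction describes.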

Finally, flow states should represent a favorable regime of viscosity and monitoring. In flow, task-relevant invariants remain stable, updates proceed smoothly, and the system remains tightly coupled to its own transitions without excessive self-interruption. The phenomenology of flow, a sense of continuous agency and clarity, fits the expectation of high-quality overlap plus effective transition awareness.

Section 7. Limits, objections, and what this model claims

A common objection to any architectural account is that it explains structure without explaining “the glow,” the intrinsic feel of particular sensory qualities. I agree that the present proposal does not fully explain sensory-specific character. It does not claim that overlap alone turns computation into redness or turns dynamics into pain. What it does claim is that several central features of what people call qualia are fundamentally temporal: continuity, presence, and ownership. Those features are not optional decorations on experience. They are the stage on which sensory qualities appear as lived.

A second objection is that this approach risks collapsing into a sophisticated functionalism, and functionalism is often accused of leaving the explanatory gap untouched. The best response is to admit what functionalism can and cannot do, and then be specific about the gain. The gain here is not that we have derived qualia from logic. The gain is that we have decomposed a monolithic mystery into components with architectural signatures. Even if one remains a dualist about sensory character, one can still accept that continuity and ownership depend on specific temporal constraints. That is progress, because it turns parts of the debate into a research program rather than a metaphysical stalemate.

A third objection is that many non-conscious processes are iterative and self-referential, so why should tracking iterative updating yield consciousness? The answer is that the proposal is not “any recursion equals consciousness.” It is a claim about a particular kind of recursion: a system that (1) maintains a limited working set with partial overlap across time, (2) uses that overlap to form temporally extended constraints, and (3) makes transition information available to guide subsequent updates. That combination is more specific than generic recursion, and it is closer to what brains appear to do when they are awake and coherent.

Where does this leave the hard problem? I do not think this account dissolves it in one step. But it changes the terrain. It suggests that a large part of what makes qualia feel irreducible is that philosophers have been looking for a static property when the relevant object is a temporal structure. If experience is in significant part the lived tracking of ongoing updating, then the right explanatory target is not an instantaneous state description. It is a dynamical account of how a system binds itself to its own immediate past, monitors its own transitions, and stabilizes a self within that stream.

In that sense, the most important shift is from “where do qualia come from” to “under what temporal conditions does a system’s processing become present to itself.” That is a question that can be sharpened, modeled, and tested. It belongs equally to philosophy of mind and to the engineering of future artificial systems that aim to be more than discrete sequence predictors.