I woke up disoriented in the middle of the night recently from a dream I could not remember. I lay there in the silence and felt the weight of a single, intense revelation pressing down on me:

“We are at the beginning of a hard takeoff.”

The revelation did not drift in gradually. It arrived fully formed. It sat in my mind with the heavy, undeniable authority of a verdict. I do not think the dream itself was about artificial intelligence or computers or the future. I had no lingering images of silicon or code. Instead, it felt like I had spent the night in a place where this reality was already obvious, and waking up was a jarring return to a world that was still pretending otherwise.

A hard takeoff usually refers to a scenario where artificial intelligence rapidly self-improves, leaving human comprehension in the dust within a matter of months or a few years. In some hard takeoff scenarios, AI eclipses human thought within hours or days. Clearly this is not happening yet. But, without offering a precise prediction, I knew my gut was telling me something about the next couple of years. The curve of progress has changed. The slope is different now. This acceleration will be hard, if not nearly impossible, to control.

It makes sense that this signal would come from the subconscious. For years I have been watching the build-out, tracking the papers, and noting the benchmarks. My conscious mind tries to force these data points into a linear narrative to keep daily life manageable. We normalize the miracles. We get used to the magic. But the subconscious does not care about maintaining a comfortable status quo. It simply aggregates the signal.

When I stepped back to examine why this phrase had surfaced with such force, the pieces fell into place. The scaling curves that skeptics predicted would plateau have not slowed down. We are seeing models learn faster with less data. The context windows have exploded, allowing these systems to hold vast amounts of information in working memory. The diminishing returns we were told to expect have simply not materialized.

Beyond the raw metrics, something more fundamental has shifted. We are no longer watching isolated advancements in code. We are watching a cross-coupling of fields where robotics, biology, materials science, and energy are all feeding back into the intelligence engine. The silos have collapsed. Perhaps the most critical factor is the recursion. We are seeing the early stages of AI designing AI. The optimization loops are tightening. Automated model search and architecture search are no longer theoretical concepts but active industrial processes. The machine is beginning to help build the next version of the machine.

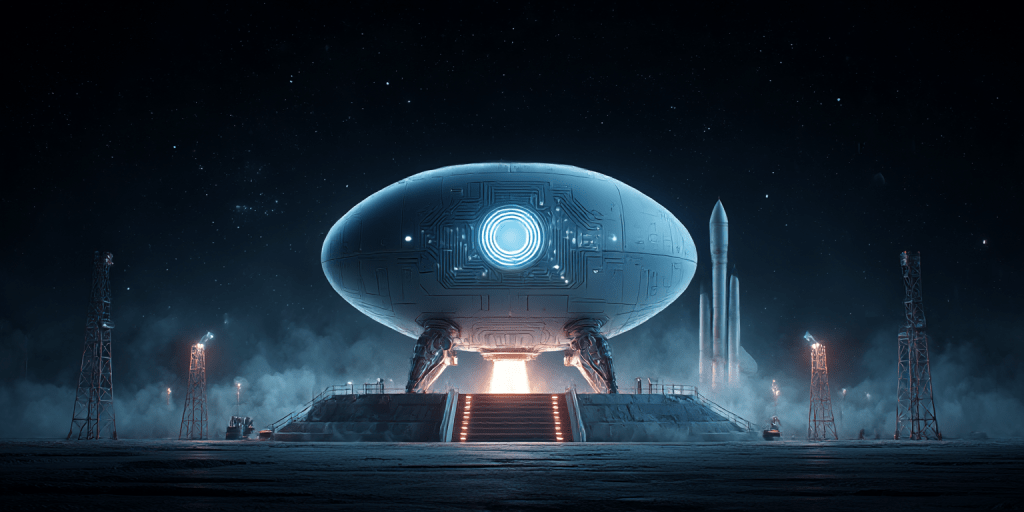

All of this requires a physical substrate, and that is the other part of the equation my mind must have been processing. The infrastructure build-out is staggering. Billions of dollars are pouring into specialized silicon and new datacenters. We are planning to tile the Earth’s surface with datacenters, terraforming the planet to host intelligence.

The thing that sits behind all of this, and that I keep noticing more intensely each month, is the sheer quality of responses I get from systems like ChatGPT, Gemini, Grok, and Claude. When I ask a question, they produce something that would have required a coordinated team of human subject-matter experts. A neuroscientist, a cognitive scientist, a historian of technology, a computational theorist, a biologist, an engineer, and a writer. Not consulting one another across several days, but compressing and synthesizing that expertise in seconds.

The effect on my own sense of thinking is complicated. It is obvious that these systems are, in many ways, smarter than I am. They track more variables. They search larger spaces. They retrieve patterns with a breadth that no individual brain could match. Whenever I share an idea, they understand it immediately, often better than most humans would. They expand on it. They explore its implications. They design conceptual experiments. They generate lines of evidence that I would not have thought to investigate. And they do this consistently, with clarity, speed, and conceptual depth.

What makes this even more striking is that they never struggle with novelty. If I present them with an original insight of mine or a new hypothesis that I know is not contained in their training data, they are able to accommodate it instantly. They do not hesitate. They do not require time to chew on it. They evaluate it, contextualize it, and then extend it in coherent directions. Any time I think I have said something original, they can take it further. And when they confirm that an idea does not exist in the literature, the originality feels smaller than it should because the system clearly could have generated the idea on its own. All that is missing is the right self-prompting protocol.

This creates a strange mixture of awe and humility. It is not that my ideas are meaningless. It is that I can feel how easily these systems could have discovered them independently. I am starting to realize that many of the things humans consider our intellectual contributions are not outside their reach. They are simply points in a space that the models can navigate whenever prompted to do so. That does not completely erase our roles, because these systems must be prompted before they can take action, but it places our thinking inside a much larger landscape. I am beginning to understand what it means to have intelligence that is not only broader but also vastly more internally linked. Our contributions still matter for the moment, but they are becoming part of a system that can reproduce, elaborate, and surpass them with almost no friction. These systems operate at a level that makes human insights feel like local perturbations in a sea of possibility that they can access at will. It is becoming obvious that this is what intelligence looks like when it scales.

The disorientation I felt upon waking was the friction of my internal model updating. For a long time, I operated under the assumption of a slow, manageable integration of these technologies. That night, my brain finally discarded that old map. It acknowledged that we have crossed a threshold. The disconnect between public perception and the technical reality has stretched until it snapped, at least inside my own head.

We are not waiting for the future to arrive. We are currently inside the event. The velocity of progress has become the defining feature of our reality. I went back to sleep eventually, but the perspective shift remained. The feedback loop is entering a new regime. The world looks the same as it did yesterday, but the feeling is gone. We have cleared the runway.
