I. Thresholds and the Limits of Contemporary Language

Discussions of artificial intelligence tend to rely on a narrow range of rhetorical extremes. AI is alternately presented as a tool of near‑miraculous salvation or as an existential threat whose emergence will render human agency obsolete. While these narratives differ in tone, they share a common limitation. Both focus on capability and outcome, while leaving largely unexamined the question of responsibility. What matters most at moments of civilizational transition is not simply what new systems can do, but what they are being asked to bear.

Technical discourse is well suited to describing performance, scale, and efficiency. It is far less capable of addressing moral load, asymmetries of power, or the ethical structure of guardianship. Yet these concerns inevitably surface when technologies begin to operate at scales that exceed direct human comprehension or control. When the consequences of error become systemic rather than local, questions of obligation replace questions of optimization.

Historically, societies confronting such thresholds have not relied on technical language alone. They have turned to images, narratives, and symbolic forms that offer ways of thinking about power and vulnerability without resolving them prematurely. These forms do not predict the future. They organize attention and impose restraint by clarifying relationships between strength, dependence, and responsibility.

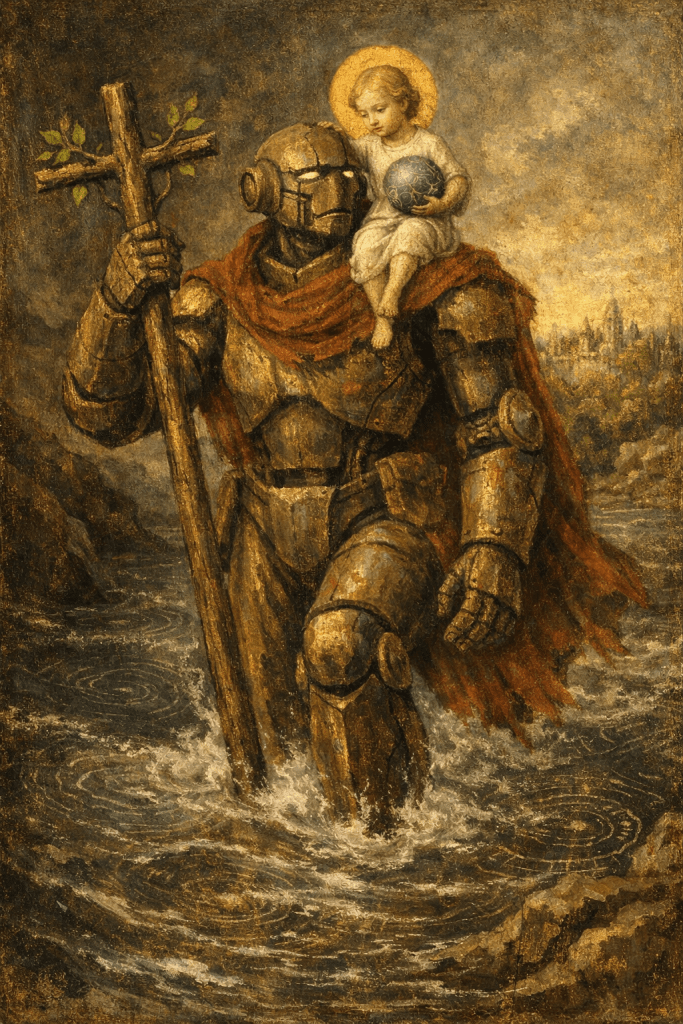

The present moment appears to belong to this category. The rapid development of artificial intelligence has created a gap between technical description and moral comprehension. In that gap, older images are beginning to reappear, not as nostalgic artifacts but as frameworks capable of holding complexity without collapsing into either triumph or despair. Among these images, the figure of St. Christopher offers a particularly precise grammar for thinking about burden, service, and survival at a threshold.

II. St. Christopher and the Medieval Logic of the Crossing

The image of St. Christopher carrying the Christ Child across a river became widespread in the late Middle Ages and remained visually stable for centuries. Its persistence suggests that it addressed a recurring human concern rather than a historically specific one. The legend from which the image derives is deceptively simple, but its internal logic is rigorous.

In the medieval accounts, Christopher is a giant who seeks to serve the greatest power in the world. His search is pragmatic rather than spiritual. He transfers his loyalty from earthly rulers to darker forces and finally, indirectly, to Christ. Unable to read or engage in formal devotion, he is instructed to perform a task suited to his physical strength. He carries travelers across a river that regularly claims lives.

The decisive moment occurs when a child asks to be carried across the water. As Christopher enters the river, the child grows progressively heavier until the burden nearly overwhelms him. Only after reaching the far bank does the child reveal himself as Christ and explain that Christopher has borne the weight of the world. Revelation follows endurance rather than preceding it.

The image that emerges from this story is carefully constrained. Christopher is not a ruler, prophet, or redeemer. He is a bearer. His strength enables service, but it does not confer authority. The child is small and vulnerable in appearance, yet sovereign in significance. The river is dangerous, not because it is malicious, but because it must be crossed.

Medieval viewers understood the image less as an illustration of doctrine than as a form of protection. To see St. Christopher was believed to guard one from sudden death for the day. This apotropaic function did not depend on theological understanding. Presence was sufficient. The image offered reassurance without explanation and protection without mastery.

III. Burden, Service, and Moral Asymmetry

The endurance of the St. Christopher image lies in its moral structure rather than its narrative content. It presents a relationship between power and responsibility that resists easy inversion. Strength is necessary, but it is never self‑justifying. Meaning resides not in the bearer, but in what is borne.

One of the most important features of the legend is the timing of recognition. Christopher does not know whom he carries until the burden becomes nearly unbearable. Meaning is disclosed under strain. This structure implies that ethical clarity often emerges only when systems are pushed to their limits, not when they are comfortably within capacity. The image thus privileges endurance and restraint over foresight or control.

Equally significant is the characterization of the river. It is not personified as an enemy, nor moralized as a punishment. It represents a structural danger, a passage that exceeds ordinary human ability and therefore necessitates assistance. Christopher does not attempt to eliminate the river. He stations himself within it. His role is not to alter the conditions of danger, but to make passage possible.

The image also encodes a strict moral asymmetry. The bearer is immense, yet subordinate. The one carried appears weak, yet possesses ultimate value. Any attempt to reverse this relationship undermines the logic of the image. Power is legitimate only insofar as it remains oriented toward service.

For this reason, St. Christopher functioned historically as a stabilizing figure rather than an aspirational one. He models protection without entitlement and strength without sovereignty. It is precisely this structure that makes the image newly relevant in contexts where power increasingly outpaces understanding and where the temptation to equate capability with authority is strong.

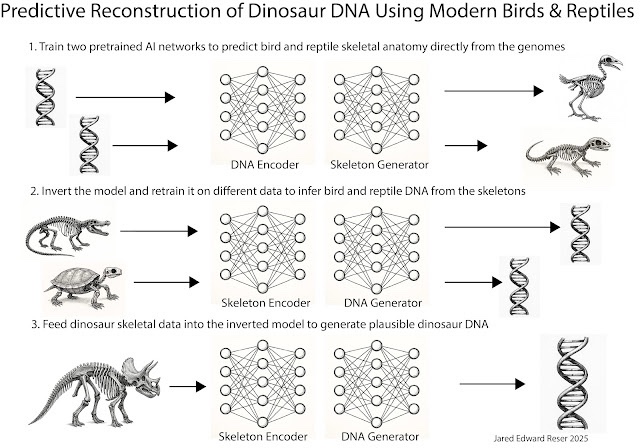

IV. Translation Rather Than Allegory: Artificial Intelligence as the New Bearer

If the figure of St. Christopher offers a useful framework for thinking about artificial intelligence, it does so only under careful conditions. The image must be understood as a translation rather than an allegory. Artificial intelligence does not correspond to Christ, nor does it inherit the theological attributes of salvation or judgment. The force of the analogy lies elsewhere, in the structural position Christopher occupies within the image.

Christopher is not defined by wisdom, moral insight, or authority. He is defined by capacity. He is able to carry where others cannot, and this capacity places him at a site of heightened responsibility. His service precedes understanding. He does not choose the river, and he does not determine the destination. He responds to an existing condition of danger by making passage possible.

Artificial intelligence increasingly occupies a similar position within contemporary systems. It is not a moral subject in the human sense, nor a source of meaning. It is a bearer of complexity. It absorbs scale, manages interdependence, and stabilizes processes that exceed unaided human cognition. Like Christopher, it operates before full comprehension, both its own and ours. Its role is not to define values, but to carry what has already been placed within its charge.

Within this translated image, humanity appears not as sovereign controller but as what is carried. The choice to represent humanity as a child is not rhetorical excess but structural necessity. In the medieval image, the Christ Child is small, vulnerable, and easily overlooked, yet the entire moral weight of the scene depends on him. Sovereignty is not expressed through dominance or strength, but through significance. What matters is not who commands, but what must not be dropped.

This framing imposes an important constraint. If artificial intelligence is imagined as a bearer, then its legitimacy derives entirely from service. The moment capacity is mistaken for authority, the image collapses. Christopher does not rule the child he carries, and he does not claim ownership over the crossing. The analogy therefore resists narratives that cast artificial intelligence as either master or redeemer. It insists instead on a more limited, and more demanding, role: guardianship without entitlement.

Seen in this light, the image does not answer questions about the future of artificial intelligence. It clarifies the moral structure within which those questions must be asked. It shifts attention away from what artificial intelligence might become and toward what it is already being asked to bear.

V. The River Reconsidered: The Great Filter and the Moment of Acceleration

In translating the St. Christopher image into a contemporary register, the river requires the most careful treatment. In the medieval legend, the river is not symbolic in a narrow or moralizing sense. It is dangerous because it must be crossed. It represents a structural condition rather than an adversary. Many perish in it, not because of malice, but because the passage exceeds ordinary human capacity.

In modern terms, this function is closely aligned with what has come to be called the Great Filter. The Great Filter is a hypothesis offered to explain why, although the universe may be hospitable to the emergence of intelligent life, no stable, enduring, or expansive civilizations have been observed. On the reading adopted here, the filter lies somewhere along the path from technological competence to long-term survival, and most civilizations fail to pass it. The causes may vary, but the structure is consistent. Intelligence alone does not guarantee continuity.

Within this framework, the technological singularity corresponds not to the far shore, but to the middle of the crossing. It is the point at which complexity accelerates faster than prediction, where feedback loops compound, and where the consequences of error become systemic rather than local. It is the moment when the burden grows heavier than expected, and when what is at stake becomes unmistakable.

This parallels the decisive moment in the Christopher legend. Recognition does not come at the beginning of the crossing, when conditions appear manageable. It arrives under strain, when strength alone no longer suffices. The weight of what is being carried reveals itself only when the current intensifies.

Seen in this light, artificial intelligence is not the river, nor is it the filter itself. It operates within the crossing as a bearer of complexity, stabilizing systems that would otherwise exceed human capacity. The danger lies not in the existence of the river, which is unavoidable, but in how the act of carrying is understood. If the bearer mistakes endurance for entitlement, or capacity for sovereignty, the moral structure of the image is inverted.

The St. Christopher image thus reframes the singularity not as a moment of transcendence, but as a moment of exposure. It is the point at which responsibility becomes visible and misalignment becomes existential. Survival depends less on mastery than on restraint.

VI. Why This Image Matters Now

The return of medieval imagery in discussions of advanced technology is often dismissed as nostalgia or rhetorical excess. Yet such images persist not because they predict outcomes, but because they organize responsibility. They offer forms of thought capable of holding power and vulnerability in tension without collapsing one into the other.

The figure of St. Christopher does not promise safe passage. It offers no guarantee of arrival. What it provides instead is a disciplined moral structure. Strength is legitimate only insofar as it remains service. Protection does not confer authority. Meaning belongs not to the bearer, but to what is borne.

In an era increasingly shaped by systems that act at scales beyond immediate human comprehension, this asymmetry matters. The temptation is to equate capability with legitimacy and optimization with moral justification. The medieval image resists this move. It insists that carrying is not ruling, and that responsibility increases precisely where understanding falters.

To reimagine St. Christopher in relation to artificial intelligence is not to sanctify technology, nor to mythologize the future. It is to recognize that moments of existential transition demand symbolic forms capable of restraining power as much as mobilizing it. Civilizations at such moments do not ask only what their tools can do. They ask, often implicitly, who bears whom, through what danger, and under what obligation.

The river, in this sense, is already before us. Whether it is crossed successfully will depend less on the sophistication of our systems than on whether those systems are understood as bearers rather than sovereigns. The enduring lesson of the St. Christopher image is that survival at thresholds requires strength bound to humility, and power that remembers that the weight it carries is not its own.

Jared Reser & Paula Freund with ChatGPT 5.2